DP Infrastructure

Incorporating support for differential privacy into data processing infrastructure.

Vision

We are a group of systems and security researchers at Columbia University and the University of British Columbia building infrastructure systems for differential privacy (DP). We believe DP is essential in today’s data-driven world, where user data is continuously harvested to power analytics, machine learning, product improvements, and ad targeting.

Our central thesis is that user privacy is a critical computing resource: it is implicitly consumed across workloads, yet unlike CPU or RAM, it is neither monitored nor managed. We aim to change that by integrating privacy as a first-class resource into infrastructure systems, allowing it to be tracked, allocated, and conserved using familiar systems principles. DP gives us the formal foundation to define and control this privacy resource.

We’ve embedded this paradigm into:

- Kubernetes with PrivateKube & DPack, treating privacy as a schedulable resource

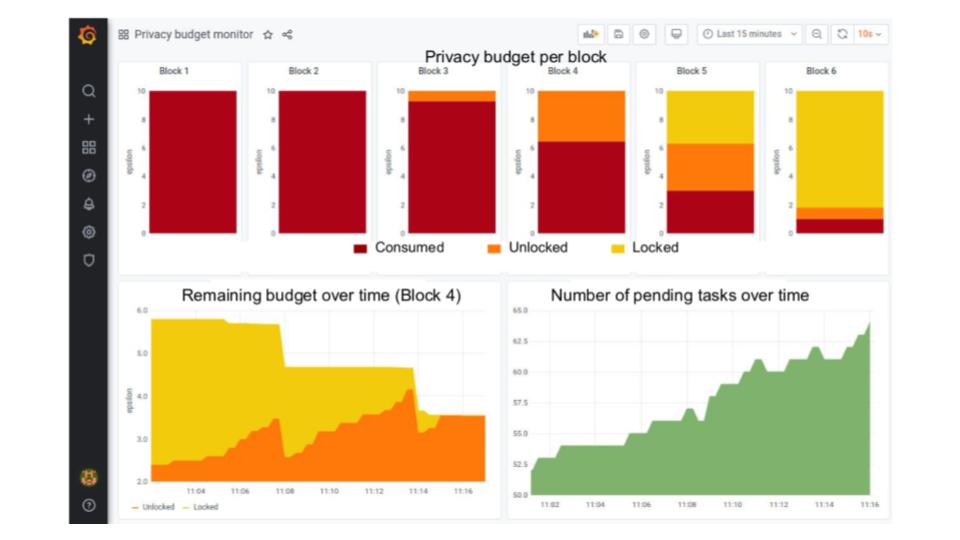

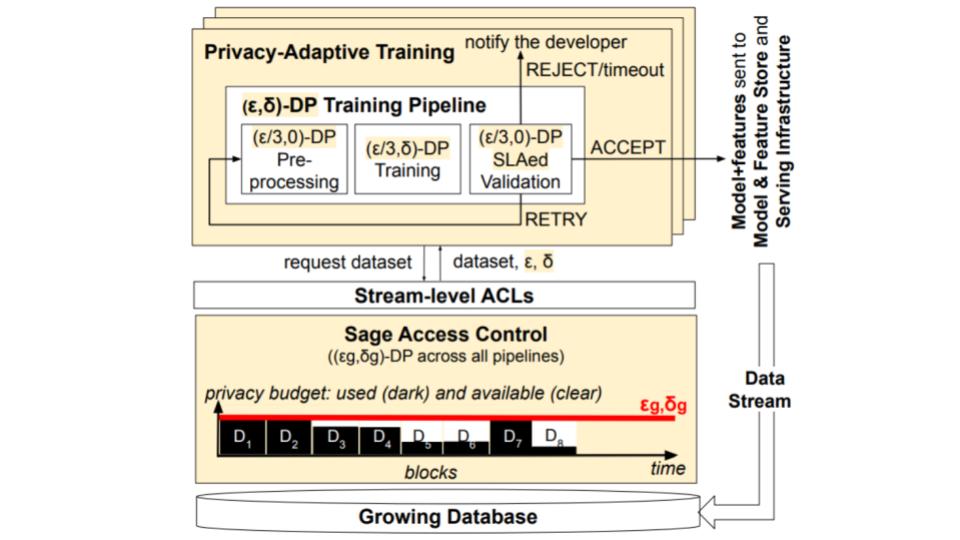

- TensorFlow Extended with Sage, integrating privacy into ML pipelines

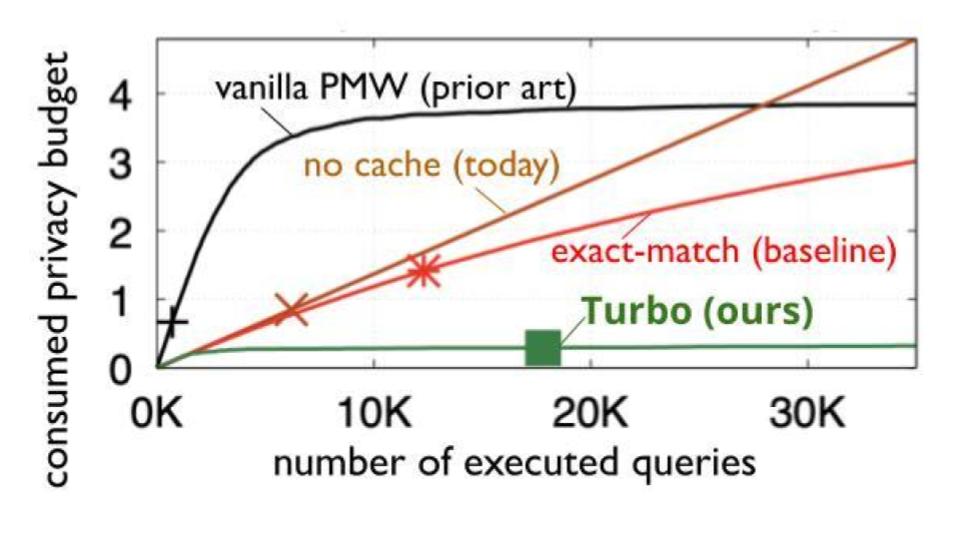

- Analytics caching systems with Turbo, reducing privacy budget usage via reuse

- Browser APIs for private advertising with Cookie Monster, now part of the W3C standardization process. Big Bird adds budget management and isolation to Cookie Monster.
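To make the caching idea behind Turbo concrete, here is a minimal sketch, not Turbo's actual design. It relies on one standard DP fact: re-releasing an answer that was already published is post-processing and consumes no additional budget. The `DPCache` class, its `query` method, and all parameter names are hypothetical and exist only for illustration.

```python
import random
from dataclasses import dataclass, field


@dataclass
class DPCache:
    """Toy cache of noisy answers (hypothetical; not Turbo's API)."""
    budget: float                          # remaining epsilon for the dataset
    answers: dict = field(default_factory=dict)

    def query(self, key, true_value, sensitivity, epsilon, rng=random):
        # Cache hit: reuse the stored noisy answer at zero extra cost.
        if (key, epsilon) in self.answers:
            return self.answers[(key, epsilon)]
        # Cache miss: refuse if the remaining budget cannot cover the query.
        if epsilon > self.budget:
            raise RuntimeError("privacy budget exhausted")
        # Laplace noise with scale sensitivity/epsilon, sampled as the
        # difference of two exponentials with mean `scale`.
        scale = sensitivity / epsilon
        noise = rng.expovariate(1 / scale) - rng.expovariate(1 / scale)
        self.budget -= epsilon
        self.answers[(key, epsilon)] = true_value + noise
        return self.answers[(key, epsilon)]
```

Under these assumptions, repeating the same query at the same epsilon returns the identical noisy answer and leaves the budget untouched; only new queries draw it down.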

Treating privacy as a resource has made longstanding DP challenges tractable with standard systems techniques. For example, PrivateKube reframes the “privacy budget exhaustion” problem as a resource scheduling problem, enabling the use of established scheduling algorithms. Turbo shows how DP-aware caching can dramatically extend the lifetime of a privacy budget. Ongoing follow-up work to Cookie Monster shows that careful scheduling of this resource is vital for resilience against budget-exhaustion attacks in browser APIs for private advertising. Together, these systems move DP closer to widespread deployment by grounding it in practical infrastructure support and well-established resource management techniques.
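The scheduling view can be illustrated with a short sketch. It is a toy model under stated assumptions, not the algorithm used by PrivateKube or DPack: data is split into blocks, each with a fixed epsilon capacity, and a task is admitted only if every block it touches can cover its demand. The `Task` type, the `schedule` function, and the smallest-demand-first policy are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    demand: dict  # block id -> epsilon requested from that block


def schedule(tasks, capacity):
    """Greedily admit tasks, smallest total demand first.

    `capacity` maps block id -> remaining epsilon and is not mutated.
    Returns (names of admitted tasks, remaining capacity per block).
    """
    remaining = dict(capacity)
    admitted = []
    for task in sorted(tasks, key=lambda t: sum(t.demand.values())):
        # All-or-nothing: every requested block must have enough budget.
        fits = all(remaining.get(b, 0.0) >= eps
                   for b, eps in task.demand.items())
        if fits:
            for b, eps in task.demand.items():
                # Unlike CPU time, spent privacy budget is never returned.
                remaining[b] -= eps
            admitted.append(task.name)
    return admitted, remaining


tasks = [
    Task("A", {"day1": 0.5, "day2": 0.5}),
    Task("B", {"day1": 0.75}),
    Task("C", {"day1": 0.25}),
]
admitted, remaining = schedule(tasks, {"day1": 1.0, "day2": 1.0})
```

Here the two small tasks are admitted and together exhaust `day1`, so task `A` is rejected even though `day2` is untouched. Because consumed budget never comes back, admission order matters more than it does for replenishable resources such as CPU or RAM.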